
- Meta restricts adults from messaging minors they are not connected to and from seeing those minors in their "People You May Know" recommendations. It is also testing ways to keep minors from messaging suspicious adults and to leave those adults out of minors' "People You May Know" recommendations.

- When anyone under 16 (or under 18 in certain countries) signs up for Facebook, the company will automatically apply more private settings by default.

- Meta is also collaborating with the National Center for Missing and Exploited Children (NCMEC) on a global platform for teens who are worried that their intimate images might be shared online without their consent.

Meta opened its announcement by saying it was providing an update on how it protects young people from harm and works to create safe, age-appropriate experiences for teens on Facebook and Instagram (1).

Last year, Meta introduced measures to keep teens from interacting with potentially suspicious adults, such as restricting adults from messaging teens they are not connected to and from seeing teens in their "People You May Know" recommendations.

In addition to these existing safeguards, the company is now testing ways to keep teens from messaging suspicious adults they are not connected to, and it will not show those adults in teens' "People You May Know" recommendations.

A suspicious account is, for example, one that a minor has recently reported or blocked. As an additional layer of protection, Meta is also testing the complete removal of the message button on teens' Instagram accounts when suspicious adults view them.

Safety Tools

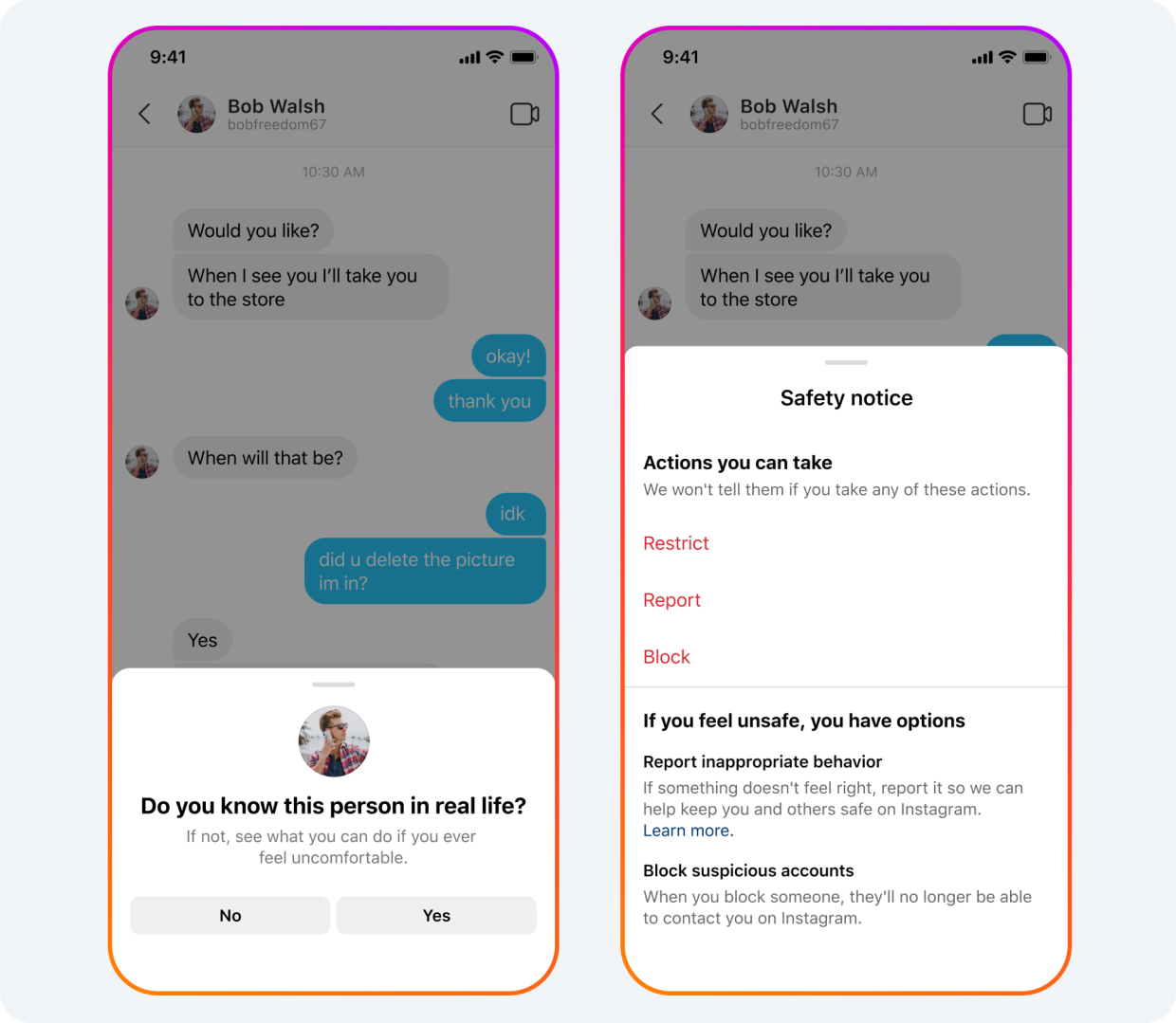

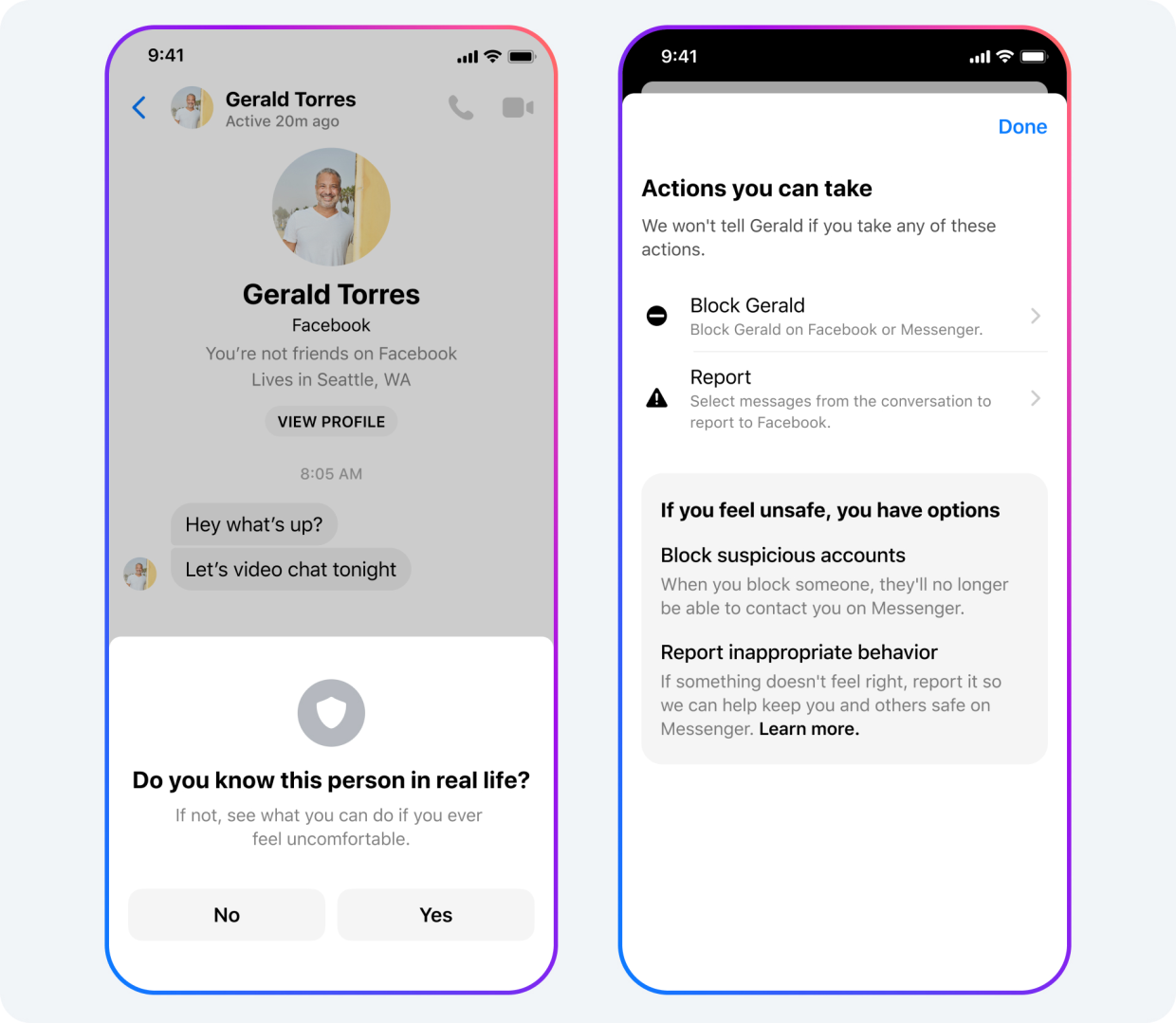

Meta is rolling out new notifications that encourage teens to use the safety tools it has built whenever something on its apps makes them feel uncomfortable.

For example, Meta prompts teens to report an account after they block someone, and it sends them safety notices with guidance on handling inappropriate messages from adults.

Meta has also made its reporting tools easier to find, and it saw an increase of more than 70% in the number of reports it received from minors in Q1 2022 compared to Q1 2021 on Messenger and Instagram DMs. In 2021, more than 100 million people saw safety notices on Messenger.

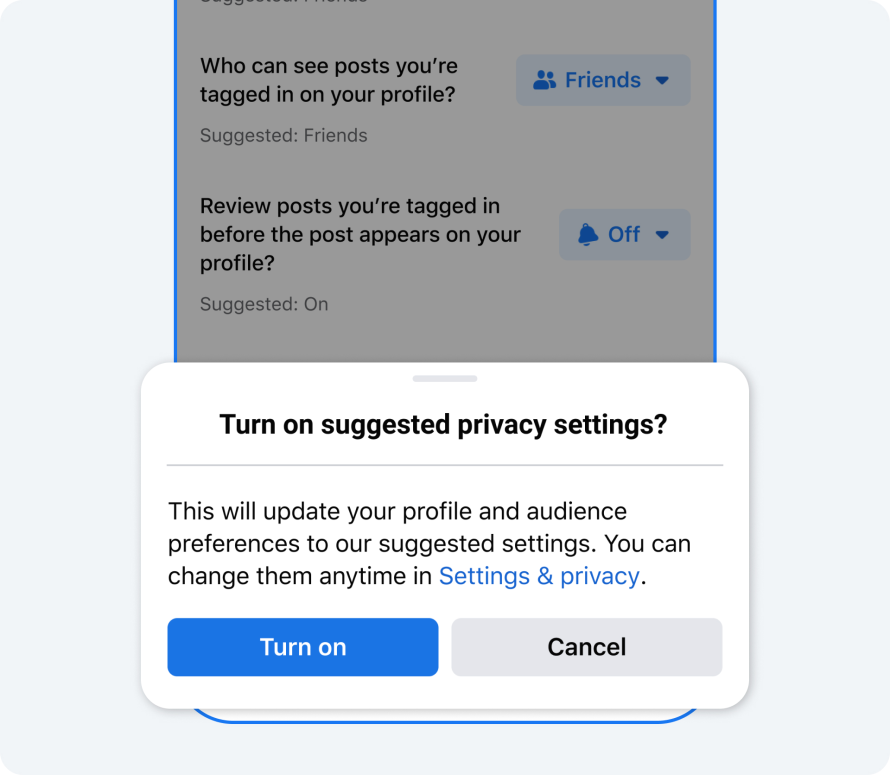

Privacy Defaults for Teens on Facebook

When anyone under 16 (or under 18 in certain countries) joins Facebook, the company will automatically apply more private settings by default. It will also encourage teens who already use the app to choose these more private settings for:

- Who can see their friends list

- Who can see the people, Pages, and lists they follow

- Who can see posts they are tagged in on their profile

- Reviewing posts they are tagged in before those posts appear on their profile

- Who is allowed to comment on their public posts

This move is in line with Meta's safety-by-design and "Best Interests of the Child" frameworks and follows the company's recent rollout of privacy defaults for teens on Instagram (2).

Meta is also providing an update on its work to stop the spread of teens' intimate images online, particularly when those images are used to exploit them, a practice commonly known as "sextortion."

Having intimate images shared without consent can be incredibly distressing. Meta says it wants to do all it can to discourage teens from sharing such images on its apps in the first place.

Meta is collaborating with the National Center for Missing and Exploited Children (NCMEC) to build a global platform for teens who are worried that intimate images they created might be shared on public online platforms without their consent (3).

The platform is intended to help prevent teens' intimate images from being posted online, and other companies in the tech industry will be able to use it as well.

To develop the platform and make sure it meets teens' needs, so that they can regain control of their content in these situations, Meta is working closely with NCMEC, experts, academics, parents, and victim advocates worldwide.

Additionally, Meta is working with Thorn and its NoFiltr brand to create educational materials that reduce the shame and stigma surrounding intimate images and encourage teens to seek help if they have shared such images or are victims of sextortion (4).

Meta is also preparing to launch a new PSA campaign that encourages people to pause and think before sharing such images online and to report them to Meta instead.